Recently a Twitter user posted a project they had been working on where they implemented a multi-dimensional array to store frames of a movie. They used the variable int ****video to store this data, and were immediately mocked by software Twitter.

int ****This is definitely the wrong way to do multi-dimensional arrays, but I’ve also seen material, even textbooks, that incorrectly teach it this way. I can easily imagine the above-mentioned Twitter user was simply taught wrong. (In fact, I think it’s very likely.) So today I want to talk about the correct way to do multi-dimensional, dynamically sized arrays in C and why. In the process, hopefully I’ll show you some of the deep, dark magic underlying this programming language.

This post will be aimed at a beginner in C or C++. I am expecting you to know how to write “hello world” and what a type is, but not much beyond that. Also, I’ll explicitly be talking about C, not C++. But most of the lessons learned apply to both languages.

Note that in this post I’ll be taking advantage of the excellent online compiler explorer. Its an online compiler and a great tool for both trying code out and seeing what it actually compiles to. Check it out.

What are those * things?

In C, int* is a pointer type, i.e., an object that points to a place in memory. In this case, that place holds an integer. A double* would point to a place in memory that holds a double. If you print a pointer, it’s a giant number indicating the address in memory that it’s pointing to. For example:

Here pa is a pointer to a. The & operator gets the address of a variable. The size I print in sizeof is the number of bytes the variable takes up. The code above is interactive, by the way. Try playing with it.

Now, how do we actually use a pointer? We can dereference a pointer with the * operator again to access the original variable:

(By the way, in the above interactive compiler, try printing pa[0] instead of *pa. And think to yourself about why it behaves the way it does. We’ll come back to that.)

One reason this is useful is because it lets us modify variables where we might not have access to them. For example, what if we wanted to make a function that did something to a variable? For example, we could iteratively compute the Fibonacci sequence with pointers like this:

This is a trivial example–we didn’t need a function for this–but I think it shows what pointers are capable of. They’re especially useful if you have more complex data structures, such as structs, trees, linked lists, etc. If you’re familiar with C++ (or basically any modern language) and references or passing by reference, this may sound familiar. I’ll tell you a secret: a reference is secretly a pointer where the language hides the dereference operation from you.

Pointers to pointers

So now we know what int* is. What’s int**? Well, you can point at anything, even a pointer. So int** is a pointer to a pointer. We can create and dereference one just like we would a pointer to an integer:

Why would you ever want to do this? Well, usually you wouldn’t. Pointers to pointers in this way is hard to read and hard to debug. That’s why the Twitter post that inspired this blog post got mocked. Nevertheless, people do use it. I will explain the reasons why (and hopefully I’ll convince you not to give in to temptation). But first we have to talk about arrays.

Static arrays

One of the first things one learns in C is how to declare an array. For example, a length 5 array of integers might be declared as

int a[5] = {4, 5, 6, 7, 8};As you probably guessed, this declares a length 5 array containing the numbers 4 through 8 inclusive. It’s a collection of fixed size. (That’s why it is static. The size is known at compile time.) You can access an element of a with the [] operator. For example, the middle element of the array (which we set to 6) might be accessed as

printf("The middle element of a is %d\n", a[2]);which would print 6. Arrays in C are zero-indexed, meaning 6 is the second element (counting from zero) of the array a.

A common novice mistake would be to try and print the array itself. Let’s see what happens when we try that:

Well, that’s interesting! What’s going on here? Does it look familiar? Maybe like the address we printed out from a pointer above? Arrays in C are pointers. And we can in fact interact with them that way. What happens when we dereference the array in our example?

Hey, look, it’s the element at the start of the array! Now I’m going to show you a neat trick:

It’s the middle of the array, the second element in fact. What we’ve just shown is that a[i] is the same as *(a+i). What’s going on here? Well, up until now, I’ve hidden two key facts from you:

- When you create an array, it allocates enough memory to store the number of elements it contains, and it puts them next to each other in memory. The third element of

ais literally next to (“to the right of”) the second. - You can do arithmetic on pointers. Adding 1 to a pointer “shifts it to the right” by the size of one object. Subtracting it “shifts to the left.”

As we already established, the array a is secretly both a contiguous block of memory and a pointer that points at the first element of that block. By adding two to it, we shifted the pointer two elements to the right, and now we’re pointing at the middle of the array!

So now I have a pop quiz for you. What does 2[a] do? I’ve put an interactive compiler explorer window below with a[2]. After you’ve thought about it, try changing this to 2[a] and test your hypothesis.

Were you right? Disturbing, isn’t it? What do you think is happening? Here’s a hint: We know that a[2] actually is *(a + 2). And we know that addition is commutative, i.e., 3+4 is the same as 4+3.

A short aside on strings

Strings are strings of text. In C they’re created as arrays of chars (alphabet symbols). For example,

char name[] = "Jonah";where here I’ve neglected to specify the size of the array, as the compiler can figure it out from the right-hand side of the equals sign. Strings in C are null-terminated, meaning they have a secret final character that tells you that the string is over. Let’s see for ourselves:

The first printf line uses the %s format string to print the string. The second loops over the array and prints the characters individually. The third one prints out the integer encoding of the characters. They’re integers under the hood, just interpreted as letters of the alphabet. That last one, the zero, is the null terminator that tells you the string has ended. Let’s use the null terminator, and what we know about pointers, to write our own function that prints a string:

This is how printf("%s", name) works under the hood. I bring this up not because it’s particularly relevant for this discussion, but because I think it’s fun. Also, lots of old C code that processes text does loops like this, where it iteratively adds to the pointer rather than incrementing an index. C++ even generalized this idea. This is essentially what iterators are in the C++ standard library.

Arrays of dynamic size

Now let’s return to our original discussion. So far, all the arrays we’ve talked about have been of fixed size, a size you had to choose at compile time. What if we want to be able to change the size at run time? That’s where malloc (defined in the stdlib.h header) comes in. We already established that arrays are pointers. malloc provides a way to request an arbitrary amount of memory and point at it. The call

int *a = (int *)malloc(num_elements*sizeof(int));requests an amount of memory capable of containing num_elements integers and returns a pointer to the beginning of that block of memory. Some syntax details: malloc returns a pointer of arbitrary type (called a void pointer) and you must tell the compiler you want to point at integers. malloc also requests an amount of memory in bytes (8 bits). But most types are more than 8 bits. Integers, for example, are usually 32 bits (though sometimes they’re 64 bits depending on architecture). That’s why that sizeof call is needed.

Once we have a, we can treat it like any of the arrays we’ve already worked with. For example, we can access elements with the [] operator. One important detail, though, is that the memory requested from malloc doesn’t go away automatically when you’re done with it. You must release the memory with the free function via

free(a);If you don’t, your program will never release the memory. If you make a bunch of malloc calls but don’t call free, your program will request more and more memory from your operating system until you run out. If you’ve heard of a memory leak, that’s a memory leak.

For this reason, it’s considered good practice to minimize the amount of dynamic memory allocations you do in your code, and to ensure that every malloc call is coupled to a free call, ideally close by in your text file so you can see that they’re paired up.

Note that I show malloc here because it is by far the fasted and most widely used way to create dynamic memory in C. However, there are safer tools such as calloc and aligned_malloc that prevent some pitfalls of malloc so keep that in mind if you ever want to apply this in the real world.

A brief diversion on C++ language features

C++, which contains C, has alternatives to malloc and free called new and delete, which do interact with the language differently, but in ways beyond the scope of this blog post. However, you should almost never be working with new, delete, malloc, or free in C++ because the language provides safer alternatives that do more with less code. For example, the std::vector container is a dynamically sized array that cleans itself up when it’s done, and std::unique_ptr and std::shared_ptr will automatically clean up after themselves, negating the need for calls to free.

Multi-dimensional arrays the wrong way

We’re ready to talk about using int** and friends as multi-dimensional arrays. So far, all the arrays we’ve talked about are one-dimensional. How can we generalize? Well, the obvious (and wrong) way to generalize is to nest them. For example, we could make a two-dimensional array of dynamic size like this:

int **a = (int **)malloc(num_rows*sizeof(int*));

for (size_t i = 0; i < num_rows; ++i) {

a[i] = (int*)malloc(num_columns*sizeof(int));

}Then you could access, say, the second row and third column of a via a[2][3], which is intuitive. Note the size_t in num rows. In general it’s better to use size_t to represent array sizes and indices, as it is less likely to overflow.

Note, of course, that you have to free all that memory when you’re done! And because we have an array of arrays, we have to free the inner arrays before we can free the outer one.

for (size_t i = 0; i < num_rows; ++i) free(a[i]);

free(a);Otherwise, while a will no longer point at valid memory, the memory from, say, a[2] will never have been released. If you try to go the other way, what happens?

We accessed invalid memory and the operating system killed our program. That’s a segmentation fault.

Why are arrays like this bad?

malloc and free are dangerous, and we want to minimize their use. Pointers are also possible to interpret in multiple ways. Is int** a 2d array or a pointer to a pointer? And many * characters can make code difficult to read. Pointers can also be problematic on heterogeneous architectures like GPU systems.

Performance is another major consideration. malloc and free are slow. For technical reasons, it’s hard for the computer to provide you memory at runtime when you ask for it. So you want to avoid making malloc and free calls as much as possible. Let’s just do some quick profiling:

This mocks up the cost of allocating and freeing a 100×100 array, which isn’t huge. I compare that to a length 10,000 1D array, which is just one malloc. Tens of microseconds doesn’t sound like a lot, but it is absolutely an eternity on a computer. Repeated calls to malloc and free can also slowly grow your program’s memory footprint, due to how the operating system handles memory under the hood. (Note that this is not a fair profile. For example, profiling should be averaged over many iterations. There are also caching optimizations the computer is probably performing under the hood for the malloc/free of b.)

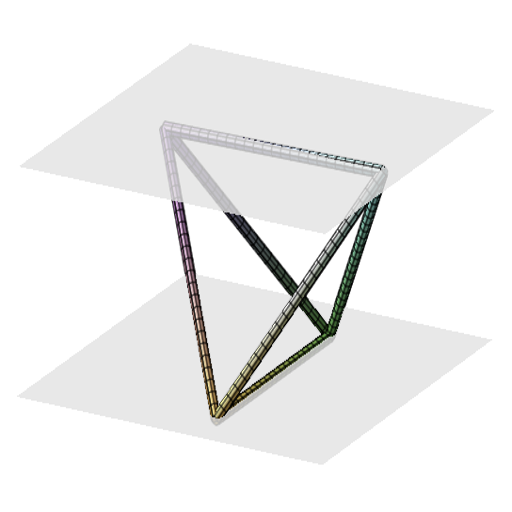

Another reason multi-dimensional arrays can be slow has to do with caching and vector performance. We think of CPUs as serial processors that operate on a block of memory one instruction after the other, but this is very much not the case. Below is a die image (essentially a photograph of a chip) of the Intel Meteor Lake architecture. Those different colored regions on the chip do different things. It’s far from a monolith.

In particular, modern CPUs have two features I want to highlight:

- Multiple memory subsystems. CPUs actually don’t typically interact directly with RAM (also called main memory). Instead, they pull your data into a much faster memory subsystem, called a cache. There are usually levels of cache. The faster the cache, the less memory it holds. Therefore, your code is faster if it can access data that is close together, because it stays in cache and the CPU doesn’t have to pull data from farther away in main memory.

- Vector lanes. If you’re doing the same operation many times on data that is co-located in memory, the CPU may vectorize it and operate on all of that data in parallel. It will do this automatically, although it can be finicky and you can also explicitly request vector operations.

Every time you call malloc, your computer may give you memory in a totally different location in main memory. Your data is not co-located, making cache misses more likely and vectorization less likely.

Multi-dimensional static arrays

Multi-dimensional static arrays (i.e., of fixed size) are a language feature; no work is required to make them. They’re declared like this:

int a[2][3];This creates a two-dimensional array (such as a matrix) which is 2 by 3. We can access elements again with the bracket operator. For example,

a[1][1]will access the (zero-indexed) first row and first column–in this case, the last row and middle column–of the array a. But recall how we said pointers are arrays. As it turns out, under the hood, C does think of statically defined 2D arrays as pointers of pointers, so we must de-reference twice. What happens if we index into a via pointer arithmetic, the way we did before?

Evidently **a points at the zeroth row and column, *(*a+1) points at the zeroth row and first column, and **(a+1) points at the first row and zeroth column, as we’d expect. But what about *(*a+3)?

It points to the first row and zeroth column. It wrapped around! Multi-dimensional statically sized arrays are contiguous in memory. Note that there’s a language-defined choice in the way it wraps around. C is so-called row-major ordered because it wraps by rows, while Fortran is column-major and it wraps by columns. That distinction isn’t important for now, though.

Multi-dimensional dynamically sized arrays, done right

So how can we mimic the behavior of statically sized arrays? I’m going to suggest three ways. All have their place, though the first one is the least useful.

Two mallocs but many pointers

Arrays are pointers, but as we discussed at the beginning, we don’t have to allocate memory when we assign a pointer. We can assign it to memory that has already been allocated. This leads to the first way we can mimic statically sized multi-dimensional arrays. We can pre-allocate all the memory we’ll need ahead of time, then use pointers to point to locations in this big block of memory:

int *data = (int*)malloc(NUM_ROWS*NUM_COLUMNS*sizeof(int));

int **a = (int**)malloc(NUM_ROWS*sizeof(int*));

for (size_t row = 0; row < NUM_ROWS; ++row) {

size_t idx = row*NUM_COLUMNS;

a[row] = &(data[idx]);

}We save a bunch of calls to malloc by allocating all the data once, then only pointing at the address of the start of each row, assuming the wrap-around behavior we noticed in the statically sized arrays. For two-dimensional arrays, this approach can work well, but it quickly gets cumbersome as the dimensionality grows. Note that each row happens after we move by NUM_COLUMNS. That’s relevant for the next approach…

One malloc, index math

In the previous approach, we assigned pointers to positions in the data array. But what if we just cut out the middleman? The position in the data array can be computed as

size_t linear_index = row*NUM_COLUMNS + column;assuming you want to access element a[row][column]. So…let’s just do it that way:

Although it looks syntactically a little worse, this approach has several advantages. The biggest is that it generalizes easily. The formula for indexing is recursive. For a 3D array, ordered i, j, k:

size_t linear_index = k + NUM_K * (j + NUM_J * i)In other words, when you add a dimension, wrap the previous formula in parentheses, multiply by the number of elements in the new dimension, and add the index. Another advantage is that, no matter the dimension, you only have one malloc and one free. Our data is also always contiguous for the purpose of vectorization and memory locality. Finally, you gain access to the C standard library functions that work on contiguous blocks of memory, such as memset and memcpy.

One advantage of the first way over the second is that it can support “ragged” arrays where the length of a[i] might be different from the length of a[i+i], whereas this second approach cannot. But you’ll know when you’re encountering that use-case.

Cleaning it up with typedef

C provides a feature called typedef, which lets you teach it about compound types, such as an array of fixed size. If you are working with gnu compiler extensions and would like the C language to do your indexing for you, you can use it like so:

Note this trick is not in the standard and is compiler specific.

Mix and match

In fact, if you have those compiler extensions, you can use typedef as part of the malloc line. For example,

size_t num_rows = 2;

size_t num_columns = 3;

typedef int array_t[num_columns];

array_t *a = (array_t*)malloc(num_rows*sizeof(array_t))where here I’ve used the typedef keyword teach C about my 1D arrays. typedef is not strictly necessary here, but it is good practice. And it makes it much easier for a developer to read/write these compound types. Note again that this is compiler specific. above trick where you calculate the linear index always works.

On the other hand if num_columns is known at compile time but num_rows is not, then the trick always works:

#define NUM_COLUMNS 3

size_t num_rows = 2;

typedef int array_t[NUM_COLUMNS];

array_t *a = (array_t*)malloc(num_rows*sizeof(array_t))Here you are essentially creating a dynamically sized array of statically sized arrays.

Caveats

Software engineering, like all engineering is a matter of trade-offs. C and C++ are used in a wide variety of applications. Your specific situation may require, e.g., random access where contiguous memory isn’t important or is undesirable. I wrote this from my perspective, which is focused on high-performance computing. Your mileage may vary. If you ask anyone how to solve a programming problem, or what design paradigm to use, the answer is always “it depends.”

Summary

In this meandering tutorial, we’ve discussed pointers, arrays, and how they relate to each other. We’ve also touched on some of the more bizarre behavior of C as a language and how that can be abused. We finished by showing the right way to do multi-dimensional, dynamically sized arrays in C, rather than the incorrect but frequently taught approach. I hope this has been useful! If you’d like to see more posts like this that look into programming paradigms, let me know!

A proper way to create a dynamic 2D array in modern C is using a pointer to a VLA:

`int (*arr)[NUM_COLS] = calloc(NUM_ROWS, sizeof *arr);`

I missed this for sure. I’ve edited the article to include it. Thanks!